Poetoid Lyricam

Poetoid Lyricam is a camera that takes poems.

It consists of a Raspberry Pi, a camera, a thermal printer and batteries housed in the body of a 1970s Polaroid camera. The pictures it takes are fed to a neural net which generates captions; these are then mangled and reformatted to resemble a poem, which emerges from the printer on the back.

The video below includes a demonstration.

Description of the process and poetry

When you point the camera at your subject and press the shutter button, the green light on the back flashes rapidly for a short time while the image is captured, and then less rapidly while the poem is “developed”. The poem that arrives soon after will not be of high poetic quality.

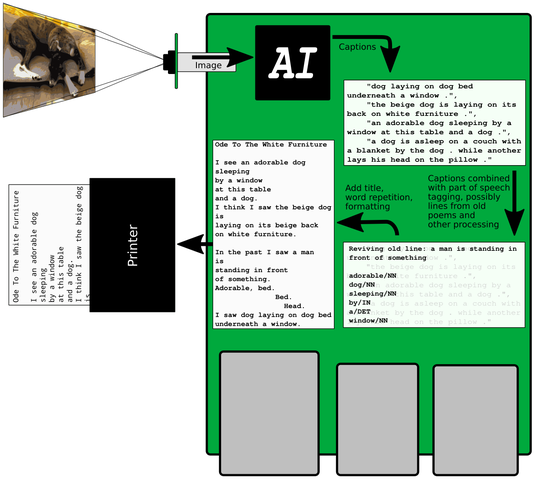

A diagram of how the poetry generation works

At EMF 2018 I did a talk on Lyricam, Dada, and my past and present experiments with text generation. The video of it can be viewed here, or below.

The Text

I wasn’t well enough prepared to learn the talk by heart, so this is basically what I read out.

I’d like to talk to you about this thing I made, Poetoid Lyricam.

Before I start, a disclaimer in case there are any actual poets present. What this produces most resembles prose poetry or free verse, possibly also known as bad poetry. If there are any poets here, I would be interested in collaborating on improving its poeticalness.

I’d like to demonstrate this now by taking a poem of you all. If anyone is concerned about being photographed, I promise the image is not kept after processing and there is no network enabled. If you’re still worried, hide your face now.

Demo

How it works

Hardware

- Raspberry Pi 3B+

  - Until recently a Pi 3B, but the serial port seems to have died

- Pi camera

- Nano Thermal Printer from Adafruit

- 2 18650 lithium ion batteries in series for 8ish V

- Pimoroni wide input shim

- Printer wants 5 to 9V

- Pi + shim wants 3 to 16V
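As a rough check on why two cells in series suit both the printer and the shim, here is the arithmetic as a small Python sketch. The per-cell range of about 3.0 V (nearly empty) to 4.2 V (fully charged) is an assumption for typical 18650 lithium-ion cells, not something measured on this build. Check your own cells’ datasheet.

```python
# Assumed per-cell range for a typical 18650 lithium-ion cell
# (roughly 3.0 V nearly empty, 4.2 V fully charged); check the datasheet.
CELL_MIN_V = 3.0
CELL_MAX_V = 4.2
CELLS_IN_SERIES = 2

# Accepted input ranges from the parts list above.
PRINTER_RANGE = (5.0, 9.0)
SHIM_RANGE = (3.0, 16.0)

def pack_range(cells, vmin, vmax):
    """Cells in series add their voltages."""
    return cells * vmin, cells * vmax

def within(pack, accepted):
    """True if the whole pack range fits inside the accepted range."""
    lo, hi = accepted
    return lo <= pack[0] and pack[1] <= hi

pack = pack_range(CELLS_IN_SERIES, CELL_MIN_V, CELL_MAX_V)
print(f"pack: {pack[0]:.1f} V to {pack[1]:.1f} V")
print("printer ok:", within(pack, PRINTER_RANGE))
print("shim ok:", within(pack, SHIM_RANGE))
```

Under those assumptions the pack swings between about 6.0 V and 8.4 V. That stays inside both the printer’s 5 to 9 V and the shim’s 3 to 16 V.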

Super Colour Swinger

- Probably from 1975

- This was not a good camera and the film is no longer made.

- But it has a lot of space inside, so I gutted it and filled it with electronics.

- I was able to retain the original shutter button, with its locking mechanism, which is handy.

This is the third version. The first I made for EMF 2016. It did its processing on a computer in my house, connecting to the WiFi to send the image and get the poem back. Since it was on 2.4GHz WiFi, it was very unreliable. So I decided it had to be standalone. My first attempt at this worked, but the poems were too repetitive and it was much too slow. This time I’ve rebuilt most of it from scratch and I think I’ve addressed both of those issues.

Before I talk about the intricacies of the software, I’d like to delve into the history of how the project came about.

Why

1916

During the First World War a group of artist refugees gathered in Zurich. Their reaction to the horror going on around them was to abandon the usual forms of art and to embrace the absurd. They chose a name for their group by selecting a word at random from a French-German dictionary. That name was Dada.

A prominent member of the group was the Romanian poet Tristan Tzara. The Dadaists wrote a lot of manifestoes, and in 1920 Tzara wrote the Dada Manifesto On Feeble Love And Bitter Love, in which he gave instructions for how to make a Dadaist poem.

TO MAKE A DADAIST POEM

- Take a newspaper.

- Take some scissors.

- Choose from this paper an article of the length you want to make your poem.

- Cut out the article.

- Next carefully cut out each of the words that makes up this article and put them all in a bag.

- Shake gently.

- Next take out each cutting one after the other.

- Copy conscientiously in the order in which they left the bag.

- The poem will resemble you.

- And there you are - an infinitely original author of charming sensibility, even though unappreciated by the vulgar herd.

This became known as the cut-up technique.
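Tzara’s recipe translates almost line for line into a few lines of Python. This is a minimal sketch that works on a string rather than a newspaper. Splitting on whitespace stands in for the scissors, and the `dada_poem` name is just for illustration.

```python
import random
from typing import Optional

def dada_poem(article: str, rng: Optional[random.Random] = None) -> str:
    """Follow Tzara's recipe on a string instead of a newspaper."""
    rng = rng or random.Random()
    bag = article.split()   # cut out each word and put them all in a bag
    rng.shuffle(bag)        # shake gently
    # take the cuttings out one after the other and copy them in that order
    return " ".join(bag)

article = "The nameless are located on the slippery side of the obscure"
print(dada_poem(article, random.Random(1916)))
```

Passing a seeded `random.Random` makes a particular poem reproducible. Leaving it out gives a fresh shake each time.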

Several people across literature and music have employed the cut-up technique.

The American Beat author William S Burroughs used it in several of his books after his friend and collaborator Brion Gysin rediscovered the technique while cutting a mount for a drawing with a Stanley blade on top of a pile of newspapers.

Thom Yorke used it in Radiohead’s Kid A album.

And also this chap.

Bowie video

I love how pleased he is with it. I think I’d have liked to have been David Bowie’s software developer.

At some point during all this, I was born.

Growing up I suppose I absorbed the sense of the nonsense of the Dadaists via its influence on this country’s tradition of absurd comedy. Spike Milligan, The Pythons, Vic and Bob.

Probably my first encounter with generating text came in the form of Mrs Hathaway’s Knickers. This was a children’s party game invented by my Grandfather. Each child was given a piece of paper with a noun or noun phrase written on it. The adult would start reading from a book, at some point they’d stop reading at a noun and point at a child, who would read their word or phrase. “Mrs Hathaway’s Knickers” for instance (Mrs Hathaway was one of my Mother’s teachers). This was hilarious.

Like many people here of my age, I learnt to program in BASIC on a microcomputer as a child. I had a BBC B. It’s over in the bar in fact, displaying Twitter. When I was about 13, my friend Peter Jones (not that one) and I collaborated on a program called ODE.

Demo ODE

The first line is of course based on the title of a poem by Poet Master Grunthos the Flatulent of the Azgoths of Kria, the second worst poets in the Universe, from the section on Vogon poetry in The Hitchhiker’s Guide to the Galaxy by Douglas Adams.

This is basically a more sophisticated version of Mrs Hathaway’s Knickers: as well as nouns, it incorporates verbs, adjectives and present participles.

It consists of a lot of terrible buggy spaghetti code, but at its heart are two lines.

```basic
PRINT "ODE TO " + NOUN$ + " I " + VERB$ + " ONE MIDSUMMER MORNING"
PRINT "WHILE " + ING$ + " " + ART$ + " " + ADJECTIVE$ + " " + NOUN$
```

The two lines are templates with placeholder variables that are replaced by words or chunks of text selected at random from lists of the appropriate type. This is similar to the game Mad Libs.
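The same Mad Libs idea can be sketched in modern Python. This is not a port of ODE. The word lists below are hypothetical and much shorter than the real ones. Each `{TYPE}` placeholder is filled with a word picked at random from the list of that type.

```python
import random
import re

# Hypothetical word lists, one per part of speech used by the templates.
WORDS = {
    "NOUN": ["A SMALL LUMP OF GREEN PUTTY", "THE KETTLE", "A TEAPOT"],
    "VERB": ["FOUND", "ATE", "SANG TO"],
    "ING": ["JUGGLING", "POLISHING", "IGNORING"],
    "ART": ["A", "THE", "MY"],
    "ADJECTIVE": ["PURPLE", "SLEEPY", "ENORMOUS"],
}

# The two ODE lines rewritten as templates with named placeholders.
TEMPLATES = [
    "ODE TO {NOUN} I {VERB} ONE MIDSUMMER MORNING",
    "WHILE {ING} {ART} {ADJECTIVE} {NOUN}",
]

def fill(template, words, rng):
    """Replace each {TYPE} placeholder with a random entry from words[TYPE]."""
    return re.sub(r"\{(\w+)\}", lambda m: rng.choice(words[m.group(1)]), template)

rng = random.Random(42)
for template in TEMPLATES:
    print(fill(template, WORDS, rng))
```

Here each placeholder is picked independently, so the two NOUNs can differ. Whether ODE reused the same noun in both lines isn’t something the listing above shows.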

With the right choice of templates and carefully crafted lists, this technique can be used to produce some interesting and amusing results. Much better than Ode, certainly.

factbot1 is a Twitter bot by Eric Drass which plays with the idea that people on Twitter, and generally, will believe plausible sounding facts. This bot dates back to before the current fake news disaster. You can hear more about that from him on stage B immediately after this.

It’s frequently less than plausible though.

Yoko Ono Bot by Rob Manuel off of b3ta combines templates based on Yoko Ono’s tweets and writings with text from several lists of things like foods, computers and celebrities.

Tracery is a JavaScript library written by Kate Compton which uses grammars described in JSON to produce text in the same way.
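To show what “grammars described in JSON” means, here is a tiny Python expander for a Tracery-style grammar. This is not Tracery itself. It handles only the `#symbol#` expansion, with none of Tracery’s modifiers or actions, and the grammar contents are made up. Each key is a symbol, each value is a list of alternatives, and `#symbol#` inside an alternative is expanded recursively.

```python
import json
import random
import re

# A made-up grammar in the style of Tracery's JSON format.
GRAMMAR = json.loads("""
{
  "origin": ["#animal# #verb# the #adjective# #thing#."],
  "animal": ["The owl", "A badger", "My cat"],
  "verb": ["admires", "eats", "ignores"],
  "adjective": ["#colour#", "enormous", "sleepy"],
  "colour": ["green", "purple"],
  "thing": ["putty", "teapot", "moon"]
}
""")

SYMBOL = re.compile(r"#(\w+)#")

def expand(symbol, grammar, rng, depth=0):
    """Pick a random alternative for symbol and expand any #symbols# in it."""
    if depth > 20:
        raise RecursionError("grammar nests too deeply")
    rule = rng.choice(grammar[symbol])
    return SYMBOL.sub(lambda m: expand(m.group(1), grammar, rng, depth + 1), rule)

print(expand("origin", GRAMMAR, random.Random(7)))
```

Because `adjective` can expand to `#colour#`, rules can nest. That nesting is what makes grammars more flexible than a single fixed template.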

Tracery is used by Cheap Bots Done Quick .com, created by V Buckenham, to allow anyone to make Twitter bots easily. Many have, and for me at least they offset some of the nasty stuff that makes Twitter unpleasant these days.

These are a couple of my favourites.

DUNSÖNs & DRAGGANs

Tasty Bargains!

Anyway, back to stuff I made.

I first encountered Markov chain generated text by playing with the Dissociated Press program built into the text editor Emacs. Which is weird in itself as I’m a Vi user.

Markov chains are built from existing bodies of text which are cut up, usually into single words. A graph is then created that encodes the likelihood that a word will be followed by another word. The graph can then be traversed randomly to build new text similar to the original and sort of grammatically correct.

It’s easier to show you.

Demo Markov Chains

The larger the body of text you feed in the better as that will lead to more variety in the output.
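Here is a minimal word-level Markov chain in Python. It’s a sketch of the technique, not the code behind any of my bots. The “graph” is a dictionary mapping each word to the list of words seen after it. Repeats are kept, so a random choice from that list naturally favours common successors.

```python
import random
from collections import defaultdict

def build_chain(text):
    """Map each word to the list of words that followed it in the text."""
    words = text.split()
    chain = defaultdict(list)
    for current, following in zip(words, words[1:]):
        chain[current].append(following)
    return chain

def generate(chain, start, max_words, rng):
    """Randomly walk the chain from start for up to max_words words."""
    word = start
    output = [word]
    for _ in range(max_words - 1):
        followers = chain.get(word)
        if not followers:   # dead end: this word was never followed by anything
            break
        word = rng.choice(followers)
        output.append(word)
    return " ".join(output)

corpus = ("the cat sat on the mat and the dog sat on the cat "
          "and the mat sat on nothing at all")
chain = build_chain(corpus)
print(generate(chain, "the", 12, random.Random(3)))
```

With a corpus this small, most words have only one or two possible successors, so the output stays very close to the input. This is why more text gives more variety.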

Sam R Cosgrave is a bot I made that uses Markov chains. It regularly searches Twitter for #haiku and records what it finds. It’s been running for several years now and has accumulated nearly 900,000 haikus. It uses those to build three Markov chains, one per line of poem.
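Sam R Cosgrave’s own code isn’t reproduced here. The sketch below only illustrates the “one chain per line” idea. It assumes word-level chains with made-up start and end markers, and a tiny hypothetical corpus in place of the collected tweets. First lines only feed the first chain, second lines the second, and so on, so each generated line resembles lines from the same position.

```python
import random
from collections import defaultdict

START, END = "<s>", "</s>"   # hypothetical markers for the start and end of a line

def build_line_chains(haikus):
    """One word-level chain per line position: chains[0] only sees first
    lines, chains[1] second lines, chains[2] third lines."""
    chains = [defaultdict(list) for _ in range(3)]
    for poem in haikus:
        for position, line in enumerate(poem[:3]):
            words = [START] + line.split() + [END]
            for current, following in zip(words, words[1:]):
                chains[position][current].append(following)
    return chains

def walk(chain, rng, max_words=10):
    """Walk one chain from START until END (or max_words) is reached."""
    word, out = START, []
    for _ in range(max_words):
        word = rng.choice(chain[word])   # every word except END has a follower
        if word == END:
            break
        out.append(word)
    return " ".join(out)

def haiku(chains, rng):
    return [walk(chain, rng) for chain in chains]

# Toy corpus standing in for the collected #haiku tweets.
corpus = [
    ["cold morning rain", "a cat watches the kettle", "steam rises again"],
    ["first snow on the roof", "the cat sleeps beside the stove", "silence again"],
]
chains = build_line_chains(corpus)
print("\n".join(haiku(chains, random.Random(9))))
```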

Inspire Ration is another bot I wrote using Markov chains. This one feeds on thousands of inspirational quote type text I scraped from the internet.

Markov chain generated text tends to be incoherent, but it will occasionally spit out a gem.

So I eventually got bored of Markov chains and moved on to AI. I started by playing with character-level recurrent neural networks in the Torch framework. These can be used in a similar way to Markov chains in that you feed them a large body of text and they learn how to produce text that looks a bit like the input.

I had a laugh producing bizarre new scripts for Friends and so on.

Then I found NeuralTalk2. This is a neural network that takes an image as input and produces a caption. It’s trained on the MS COCO dataset, a set of about 300 thousand photos with five captions each.

This is what I used to create the poems on the first version of Lyricam.

In November 2016 I took part in NaNoGenMo, National Novel Generation Month. It’s modelled on NaNoWriMo, but instead of writing a novel, the aim is to spend the month writing code that generates a novel of 50,000 words.

The result is A.I.A.I., an AI’s take on the film A.I. I extracted 5036 stills from the movie and had NeuralTalk2 produce a caption for each. Then I formatted the captions into sentences, sometimes joining two captions into one sentence. Then I built paragraphs from sets of sentences, and chapters from a number of paragraphs. Each chapter’s title is the most common word of five or more letters in that chapter that hasn’t previously been used as a chapter title. Finally I typeset it with LaTeX to produce a PDF. My friend Libby was kind enough to get one printed for me. I’ll read you a sample.

The nameless are located on the slippery side of the obscure. A man is taking a picture of a mirror; lighted man with train bike looking bed at window. A man is taking a picture of himself in a mirror; there is a man in a restaurant using the phone. A woman is holding a cat in a kitchen. A boy is sitting at the table looking at his cell phone, or a man and a woman are holding a dog.

How it works again

So, back to the camera.

Torch doesn’t work on the Raspberry Pi, so I had to switch to TensorFlow and the im2txt model, which is also trained on MS COCO.

When the shutter button is pressed, an image is captured and stored in a Python array. It’s then passed to the neural net and 4 captions are returned.

NeuralTalk2 had an option called “temperature” which you could use to adjust how sensible its captions were. Im2txt always tries to produce the most accurate captions possible, so I modified it to remove the clever beam search code and introduce a random element.
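For anyone unfamiliar with “temperature”, here is the general idea as a pure-Python sketch. It is not NeuralTalk2’s code, and the scores below are hypothetical. The model’s raw scores for each possible next word are divided by the temperature before being turned into probabilities with a softmax. A low temperature almost always picks the top word, while a high one flattens the odds so less likely words get through.

```python
import math
import random

def sample_with_temperature(logits, temperature, rng):
    """Pick an index from softmax(logits / temperature)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)                       # subtract the max for numerical stability
    weights = [math.exp(x - peak) for x in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]

vocab = ["a", "man", "dog", "banana"]
logits = [2.0, 1.5, 0.5, -1.0]   # hypothetical scores for the next word

rng = random.Random(0)
print([vocab[sample_with_temperature(logits, 0.5, rng)] for _ in range(5)])
```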

The captions are cleaned up and run through the EngTagger library that tags each word with its part of speech. Nouns, verbs, adjectives, prepositions, etc. Then there is a random chance that it will break the line on a verb, conjunction (and, or), or preposition (for, of).

It will occasionally repeat some of the nouns as a list.
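A rough sketch of those two formatting steps follows. It is not the Lyricam code. It assumes the tagger has already returned `(word, tag)` pairs with Penn Treebank-style tags, and EngTagger itself is not called here. The break chance, list chance and the choice to break before the tagged word are all made-up parameters.

```python
import random

# Assumed Penn Treebank-style tags. Prefix matching means "VB" also covers
# VBD, VBG, VBN, VBP and VBZ, and "NN" covers NNS, NNP and NNPS.
BREAK_TAGS = ("VB", "CC", "IN")   # verbs, conjunctions, prepositions
NOUN_TAG = "NN"

def to_poem_lines(tagged, rng, break_chance=0.5, list_chance=0.3):
    """tagged: list of (word, tag) pairs for one caption."""
    lines, current = [], []
    for word, tag in tagged:
        # Maybe start a new line just before a verb, conjunction or preposition.
        if current and tag.startswith(BREAK_TAGS) and rng.random() < break_chance:
            lines.append(" ".join(current))
            current = []
        current.append(word)
    if current:
        lines.append(" ".join(current))
    # Occasionally repeat some of the caption's nouns as a list.
    nouns = [word for word, tag in tagged if tag.startswith(NOUN_TAG)]
    if nouns and rng.random() < list_chance:
        chosen = [n for n in nouns if rng.random() < 0.5] or nouns[:1]
        lines.append(", ".join(chosen) + ".")
    return lines

caption = [("a", "DT"), ("man", "NN"), ("is", "VBZ"), ("holding", "VBG"),
           ("a", "DT"), ("cat", "NN"), ("in", "IN"), ("a", "DT"), ("kitchen", "NN")]
print("\n".join(to_poem_lines(caption, random.Random(4))))
```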

Finally the result is sent to the printer.

That’s it. As I said at the start, I’d love to improve the quality of the poetry, and am up for collaborating with people on that. There’s a lot of scope for employing various techniques for transforming the captions. I’d also like to investigate changing the neural net to be more poetic. The only reason I’ve not done that so far is that training from scratch will probably take weeks on my hardware.

I’ll be wandering around for the rest of the event, so if you have any questions or want your poem taken, please ask.

Thank you.

Further Reading, Viewing, and Listening

Hardware

Cut-up Technique

- Gaga for Dada

  - BBC documentary on Dada from 2016 presented by Jim Moir (aka Vic Reeves).

- Dada Manifesto on Feeble Love and Bitter Love

  - By Tristan Tzara. Includes How to Make a Dadaist Poem.

- Burroughs 101 - This American Life

  - Mostly a BBC documentary presented by Iggy Pop about William Burroughs. Stuff about cut-ups is in part 2.

- Burroughs, W.S. and Gysin, B. (1978) The Third Mind

  - Book detailing Gysin and Burroughs’ experiments with the cut-up technique with many examples. Pretty easy to find a PDF on Google.

The Bowie video I showed in the talk

An article on the cut-up technique’s influence on musicians

- An article about the Cut-Up Method

  - Mentions more recent stuff like Cassetteboy and Chris Morris.

Ode

When I say that my BBC B is in the bar showing Twitter, I’m referring to Twitbeeb.

Various versions of the Ode source are available on Github.

There’s a web based version of Ode.

Or you can play with the original BBC version in the incredible jsbeeb by Matt Godbolt.

Twitter (and other) Bots

- factbot1 and a write-up by Eric Drass / Shardcore.

  - His talk that I refer to can be seen on YouTube.

- Yoko Ono Bot by Rob Manuel of B3ta.

  - He also did clickbaitrobot, which I like. He did an interesting talk about his bots.

- Tracery by Kate Compton.

  - You can learn and play with it on the tutorial page.

- Cheap Bots, Done Quick! by V Buckenham.

  - The source of many great Twitter bots.

DUNSÖNs & DRAGGANs by @notinventedhere.

Sam R Cosgrave by me, and a write-up.

Inspire Ration also by me.

A.I.

- NeuralTalk2

  - The image captioning model I used for the original version of Lyricam, no longer developed.

- A.I.A.I., my novel for NaNoGenMo 2016

  - You can look at the source and read the result.

For the Friends script I used this char-rnn code trained on transcripts of the first series of Friends.

- im2txt

  - The TensorFlow image captioning model I use in the new version of Lyricam.

I modified im2txt to remove the beam search code; instead it uses a simpler caption-building method with a random element. Every time you run a neural net inference, the result is a list of all the words in its vocabulary ranked by how likely each is to be the next word. Occasionally, instead of using the best word, it picks another word from further down the list.
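Stripped of TensorFlow, that decoding loop looks roughly like the Python sketch below. It is an illustration, not my modified im2txt code. The `toy_model` function, the `</S>` end token, and the `surprise_chance` and `depth` values are hypothetical stand-ins. Each step usually takes the most likely word, but with a small probability it picks one of the next few candidates instead.

```python
import random

def choose_next_word(probs, rng, surprise_chance=0.1, depth=5):
    """probs: dict of word -> probability for the whole vocabulary.
    Usually return the most likely word; occasionally pick one of the
    next `depth` most likely words instead."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    if len(ranked) > 1 and rng.random() < surprise_chance:
        word, _ = rng.choice(ranked[1:depth + 1])
    else:
        word, _ = ranked[0]
    return word

def decode(model, rng, surprise_chance=0.1, max_len=16, end="</S>"):
    """Build a caption one word at a time until the end token appears."""
    caption = []
    while len(caption) < max_len:
        word = choose_next_word(model(caption), rng, surprise_chance)
        if word == end:
            break
        caption.append(word)
    return " ".join(caption)

def toy_model(prefix):
    """Hypothetical stand-in for the network: strongly prefers one script."""
    script = ["a", "man", "holding", "a", "cat", "</S>"]
    expected = script[len(prefix)] if len(prefix) < len(script) else "</S>"
    probs = {w: 0.01 for w in ("a", "man", "dog", "cat", "holding", "banana", "</S>")}
    probs[expected] = 0.9
    return probs

print(decode(toy_model, random.Random(2), surprise_chance=0.2))
```

Compared with beam search, which keeps several candidate captions and returns the most probable, this keeps only one and lets the occasional odd word through. That odd word is where the poetry comes from.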

I also made it talk directly to the Pi camera using its Python API. This saves vital milliseconds encoding and writing a JPEG, and then reading and decoding it again.

I run the TensorFlow model as a daemon, as it takes about a minute to start and load the model. The image capture and captioning are triggered by a connection to a network socket, and the result is returned over the socket as JSON.
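The daemon pattern can be sketched with Python’s standard `socket` module. This is an assumption-laden illustration, not the Lyricam daemon. `caption_image` is a hypothetical stub where the real code would grab a frame and run the already-loaded model, and the JSON shape is made up. Each incoming connection triggers one capture, the captions are sent back as JSON, and closing the connection tells the client the reply is complete.

```python
import json
import socket
import threading

def caption_image():
    """Hypothetical stub: the real daemon would capture a frame from the
    camera and run the model, which was loaded once at startup."""
    return ["a man holding a cat", "a cat sitting on a man"]

def serve(server_sock):
    """Handle connections forever; each connection triggers one capture."""
    while True:
        conn, _ = server_sock.accept()
        with conn:
            reply = {"captions": caption_image()}
            conn.sendall(json.dumps(reply).encode("utf-8"))
        # leaving the with-block closes the connection, signalling end of reply

def request_captions(port):
    """Client side: connect, read until the server closes, decode the JSON."""
    with socket.create_connection(("127.0.0.1", port)) as sock:
        chunks = []
        while chunk := sock.recv(4096):
            chunks.append(chunk)
    return json.loads(b"".join(chunks))

server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
server.bind(("127.0.0.1", 0))   # port 0 lets the OS pick a free port
server.listen()
port = server.getsockname()[1]
threading.Thread(target=serve, args=(server,), daemon=True).start()
print(request_captions(port))
```

Keeping the process alive between shots means the slow model load happens once, so each press of the shutter only pays for capture and inference.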